Get Ahead of the Curve with Bow Pod

Machine intelligence is being used today to solve many of the world’s most complex business and societal challenges, from discovering life-saving medicines to predicting stock market trends. But achieving consistently competitive AI performance at scale remains a significant challenge in the data center, especially for new and large models.

Graphcore’s new-generation Bow Pod systems are built from the ground up to accelerate AI performance, from experimentation through to production.

Based on the Bow IPU, the world’s first processor to use Wafer-on-Wafer 3D stacking technology, Graphcore’s ground-breaking Bow Pods deliver a 40% leap forward in performance and up to 16% better power efficiency than previous-generation systems. This allows AI practitioners not only to run today’s machine learning workloads faster but also to explore and create new types of models more easily.

Explore, Build, and Grow with IPU-POD™

Graphcore’s second-generation IPU-POD systems are designed to accelerate large, demanding machine learning models and to scale out flexibly and efficiently, enabling AI-first organizations to:

- unleash world-leading performance for state-of-the-art models

- push AI application efficiency to its maximum

- and optimize total cost of ownership.

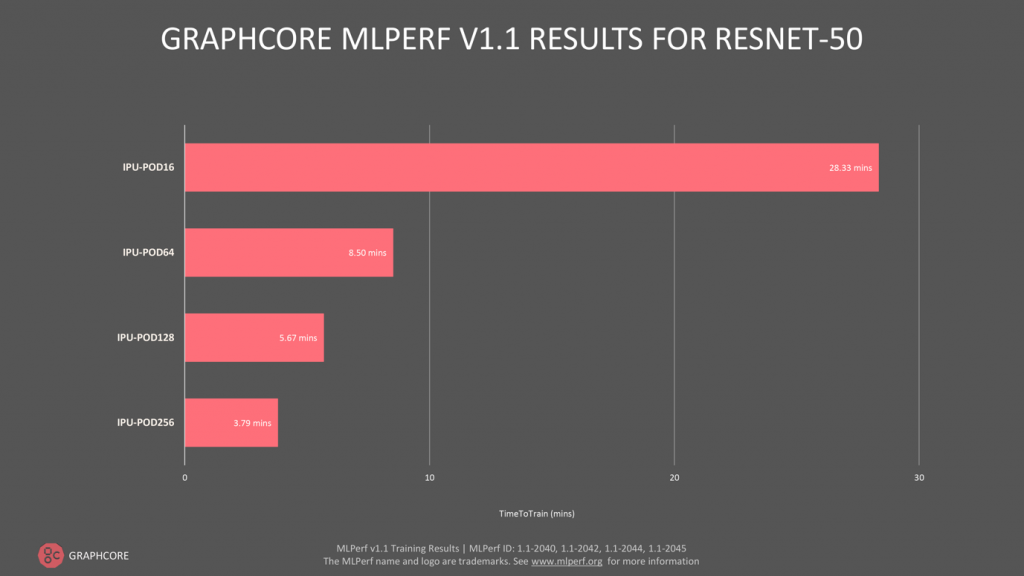

In terms of performance, Graphcore’s most recent MLPerf Training Benchmark submission demonstrates two things very clearly: IPU-POD systems are getting larger and more efficient, and the growing maturity of the Poplar software means they are also getting faster and easier to use.

Software optimization continues to deliver significant performance gains: ResNet-50 training completed in only 28.3 minutes on the IPU-POD16 system and in just 3.79 minutes on the IPU-POD256.
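To put the two ResNet-50 figures side by side, the time-to-train speedup of the IPU-POD256 over the IPU-POD16 is simply the ratio of the two training times. The short Python sketch below uses only the numbers quoted above. The helper name `time_to_train_speedup` is our own illustration, not part of any Graphcore tool.

```python
# ResNet-50 MLPerf Training times quoted above, in minutes.
POD16_MINUTES = 28.3
POD256_MINUTES = 3.79


def time_to_train_speedup(baseline_minutes: float, scaled_minutes: float) -> float:
    """Hypothetical helper: how many times faster the scaled system trains than the baseline."""
    if baseline_minutes <= 0 or scaled_minutes <= 0:
        raise ValueError("training times must be positive")
    return baseline_minutes / scaled_minutes


speedup = time_to_train_speedup(POD16_MINUTES, POD256_MINUTES)
print(f"IPU-POD256 speedup over IPU-POD16: {speedup:.2f}x")
```

Running it prints `IPU-POD256 speedup over IPU-POD16: 7.47x`, i.e. the larger system completed the same benchmark roughly seven and a half times faster.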

Flexible Compute Customized for your Deployment Needs

Machine intelligence workloads have very different compute demands, so flexibility in production deployment is critical. Optimizing the ratio of AI compute to host compute can help maximize performance while improving total cost of ownership.

Graphcore Bow Pod systems are disaggregated to enable customized compute: the number of host servers and switches can be mapped flexibly to the number of IPU platforms a workload requires, so each deployment is better tailored to production AI workloads.
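As a rough illustration of what choosing the AI-to-host ratio means in practice, the Python sketch below sizes a pod from a required IPU count and a chosen number of IPUs per host server. It assumes four IPUs per Bow-2000 IPU-Machine. The `plan_pod` helper, the `PodLayout` type and the example ratios are hypothetical and are not Graphcore sizing rules. Switches are not modeled.

```python
import math
from dataclasses import dataclass

# Assumption: each Bow-2000 IPU-Machine contains four Bow IPUs.
IPUS_PER_BOW_2000 = 4


@dataclass
class PodLayout:
    ipu_machines: int  # Bow-2000 IPU-Machines needed
    host_servers: int  # host servers needed at the chosen ratio


def plan_pod(num_ipus: int, ipus_per_host: int) -> PodLayout:
    """Hypothetical sizing helper: machines for num_ipus, hosts for a chosen IPU-to-host ratio."""
    if num_ipus <= 0 or ipus_per_host <= 0:
        raise ValueError("num_ipus and ipus_per_host must be positive")
    machines = math.ceil(num_ipus / IPUS_PER_BOW_2000)
    hosts = math.ceil(num_ipus / ipus_per_host)
    return PodLayout(ipu_machines=machines, host_servers=hosts)


# Same IPU count, two illustrative ratios: a host-heavy workload
# (e.g. heavy data preprocessing) versus an IPU-heavy one.
print(plan_pod(64, ipus_per_host=16))  # PodLayout(ipu_machines=16, host_servers=4)
print(plan_pod(64, ipus_per_host=64))  # PodLayout(ipu_machines=16, host_servers=1)
```

Because the host tier is sized independently of the IPU tier, the same 16 IPU-Machines can be paired with more or fewer servers depending on how much host-side work the workload needs.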

Built for Straightforward Deployment and Development

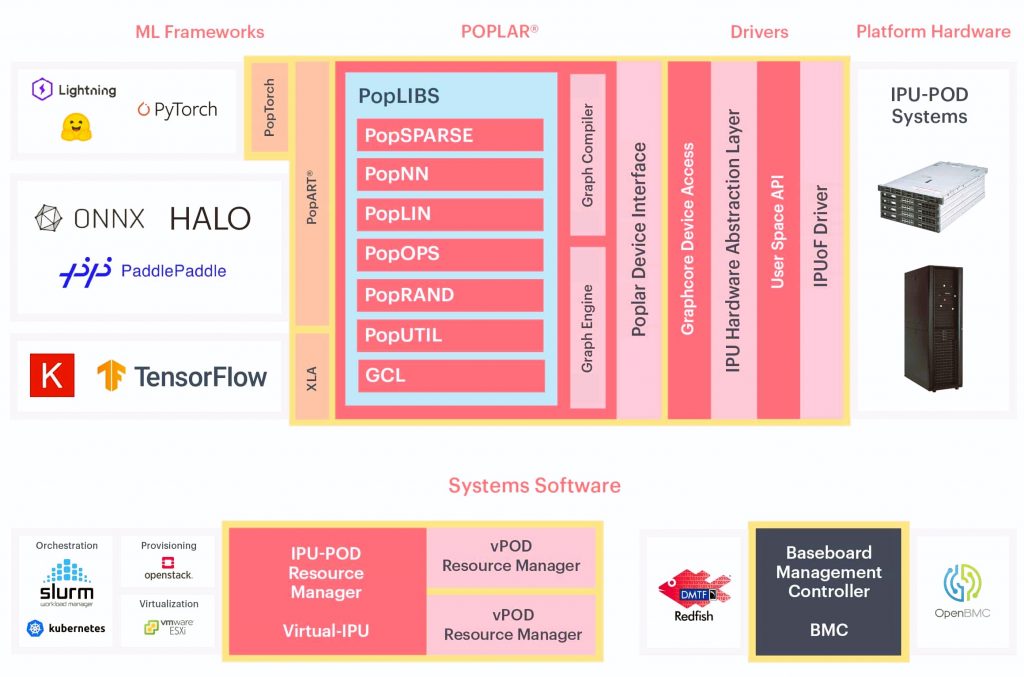

Ease of deployment has been a paramount consideration in designing Graphcore systems. The result is a solution that supports standard hardware and software interfaces and protocols, and integrates effectively with existing data center infrastructure.

Graphcore’s Poplar software stack supports industry open standards and frameworks, and much of it is open source.

For deeper control and maximum performance, the Poplar framework enables direct IPU programming in Python and C++. Poplar allows effortless scaling of models across many IPUs without adding development complexity, so developers can focus on the accuracy and performance of their application.