Executive Summary

- Deepfake is a photo manipulation technology that has been developed in an open-source model since 2018 and has been used to commit various types of crime ever since its ‘amateur’ development began.

- PII is relatively easy to obtain due to the large number of data leaks over the past decade, the high demand from credit card fraudsters, and the often ‘lifetime’ nature of such a leak, as some personal data cannot be changed.

- Because of technological limitations, deepfakes used in video calls are difficult to detect effectively. However, combining verification methods and including this attack scenario in penetration testing can significantly increase awareness of the threat.

Recommendations

- Verify candidates’ profiles against OSINT sources to check their credibility. The weak point of this method is that it is hard for an attacker to tie stolen PII and a deepfake identity to a real professional profile and work experience.

- For voice or video communication, particularly on financial matters, in which instructions come from people in top-level positions (e.g. CEOs, directors), build awareness that such instructions should be additionally verified via another communication channel.

- Consider introducing deepfake scenarios for penetration testing or internal phishing campaigns to test employees’ security awareness in practice.

Introduction

Deepfake is a kind of photo manipulation technique invented in the ‘90s, although at first it existed only in academic research. The deepfake revolution followed an ‘amateur’ implementation published on Reddit by the user ‘deepfakes’ in 2018. He made available an app that, based on footage of one person, used artificial intelligence algorithms to learn that person’s face and facial expressions and swap them with another’s in order to create fake videos. These videos most often involved cyberviolence (fabricated pornography, or denigrating videos that showed, for example, drug use). A good example of a deepfake is the YouTube video “Very realistic Tom Cruise Deepfake | AI Tom Cruise” from 2021, which shows an advanced use of this technology.

However, deepfake is not only a photo manipulation method. Another branch has emerged that focuses on preparing “audio fakes” based on voice samples of a specific person. This capability greatly strengthens scams based on business email compromise (BEC), because it makes a request that appears to come from the CEO or another senior figure in the targeted organization seem trustworthy. People in high, decision-making positions very often have many social media posts that show their appearance or voice, which an attacker can use to craft a high-quality deepfake. Unfortunately, criminals have recently used this technique successfully; one example is the theft of $35 million from a UAE company using forged email messages and deepfake audio.

Recently, a new use of the technique has emerged. According to numerous reports from US companies, deepfakes are being used to pass interviews for remote positions in the Information Technology industry. Combined with leaked PII, this creates a new variant of insider threat.

A new variant of Insider Threat

The Covid-19 pandemic brought a revolution to many spheres of life, and one change was very beneficial for cybercriminals: the spread of remote work culture. Driven by the epidemic threat, remote work became wildly popular and ‘opened up’ corporate networks to access from employees’ homes. This lowered the security of corporate networks and contributed to a huge increase in the supply of access to the networks of many companies (a particular point of interest for ransomware gangs). The remote work trend continues and is becoming a standard in the post-pandemic IT sector, partly because companies now look for talent globally in many specializations.

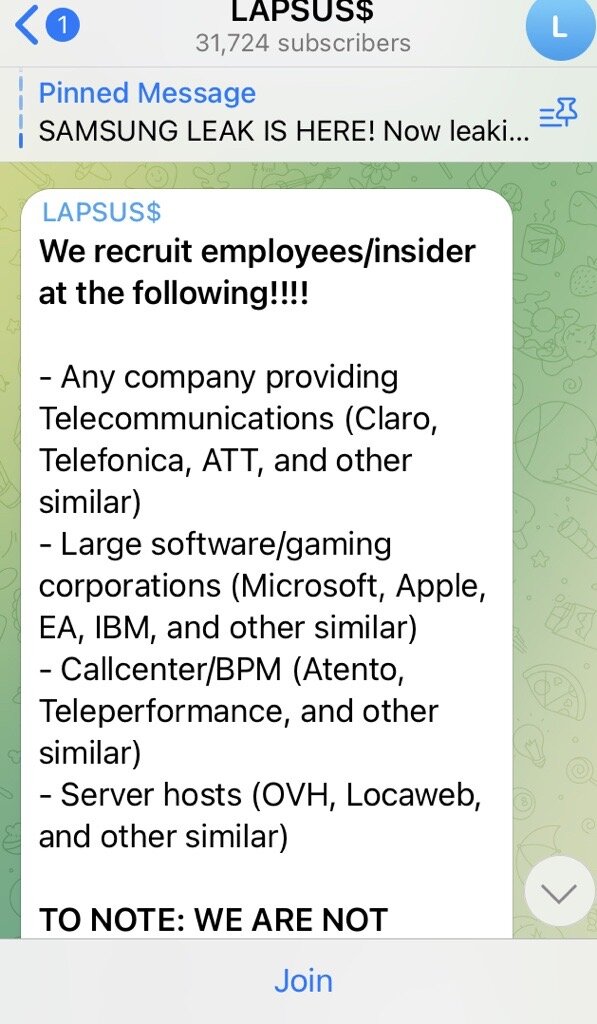

The insider threat is one of many ways of gaining access to data or infrastructure. It involves recruiting a company employee, often in exchange for attractive financial terms, in order to learn about the company’s policies (especially security and financial ones), procedures and habits, which makes it an effective scenario for various types of crime. In the screenshot below, we can see a specific offer aimed at insiders, published by the Lapsus$ group on their official Telegram channel:

(Source: silentpush.com)

The effectiveness of such recruitment is difficult to research, as public sources reveal neither how successful it is nor the root cause vector of cybersecurity incidents (mainly for reputational reasons). Flashpoint has published an article describing the TTPs of insider threat recruitment and its many classifications. To understand the issue, the following categorization of insider types can be used:

- Accidental insiders: An insider who takes inappropriate and potentially dangerous action unknowingly and without the intent to harm. They have no motive and have not consciously decided to act.

- Negligent insiders: An insider who is not intentionally harming the organization, but has made the decision to take risky action with the hope that it will not materialize into a threat. They have no motive but have consciously decided to act inappropriately.

- Malicious insiders: An insider who knowingly takes action to harm the organization. They most likely have some motive, be it financial or related to a personal grievance, and have made the conscious decision to act on it.

The new variant of insider threat combines two elements. The first is PII acquired from sources such as leaked databases, shops that specialize in identity information of real persons, and sometimes lucky OSINT findings. The second is deepfake technology, which is actively developed in an open-source model and for which training material on a specific person can be found on social media channels and video platforms. Together, these make the method very easy and effective.

Criminals target the US because the country has a developed remote working market and is open to sourcing new employees globally. Furthermore, over the many years in which credit card fraud has developed, many shops and ‘collections’ of personal data of US citizens have been created, because since the introduction of two-step verification methods such as VBV or 3DS, credit card data alone is often not sufficient for a successful transaction. Another problem is the immutability of certain individual data linked to PII, which makes a leak of this kind effectively a ‘lifetime’ leak. As this method develops, criminals will probably attempt to apply for positions in Europe or Asia, although at the moment there are no reports of such activity.

Regarding the open-source model of deepfake development, one GitHub repository is a strong candidate for a tool actively used in attackers’ toolkits. It is called DeepFaceLive, and its described functionality, “Real-time Face-swap for PC Streaming and Video Calls”, makes this hypothesis very probable. Interestingly, the repository ships default models of non-existent persons, which suggests one possible detection method: monitoring public profiles for these faces.
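The idea of monitoring for default model faces can be illustrated with a minimal sketch. It assumes that each face crop has already been converted to a small grayscale grid (for example with an image library, which is not shown here) and uses a simple average hash with a Hamming-distance threshold. The function names, the toy data and the threshold are hypothetical and are not taken from DeepFaceLive or any other tool.

```python
def average_hash(pixels):
    """Return a bit string with '1' where a pixel is brighter than the grid mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return "".join("1" if value > mean else "0" for value in flat)


def hamming_distance(a, b):
    """Count the positions at which two equal-length bit strings differ."""
    if len(a) != len(b):
        raise ValueError("hashes must have the same length")
    return sum(x != y for x, y in zip(a, b))


def matches_known_default(pixels, known_hashes, max_distance=1):
    """True if the grid is within max_distance bits of any known default-face hash.

    max_distance is an assumed threshold and would need tuning on real data.
    """
    candidate = average_hash(pixels)
    return any(hamming_distance(candidate, known) <= max_distance
               for known in known_hashes)


# Toy 4x4 grids stand in for downscaled face crops (hypothetical data).
default_face = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [200, 200, 10, 10],
    [200, 200, 10, 10],
]
known = [average_hash(default_face)]

# A uniformly brighter copy keeps the same hash, so it still matches.
brighter_copy = [[value + 10 for value in row] for row in default_face]
print(matches_known_default(brighter_copy, known))  # True
```

A hash of this kind tolerates small changes in brightness or compression, but it is only a supplementary signal: it can match only faces that have already been collected.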

Currently, there is no information on the attribution or affiliation of the threat actors using this method. However, given previous detections of malicious use of artificial intelligence, threat actors from Russia and China and those using the Chinese language can be singled out as candidates.

Quoting the FBI note on this activity (see References), we can also detail what data or resources interest the attackers: “Notably, some reported positions include access to customer PII, financial data, corporate IT databases and/or proprietary information”. As for the job profiles they are interested in, “the remote work or work-from-home positions identified in these reports include information technology and computer programming, database, and software-related job functions”.

Detection opportunities

In its public note, the FBI described a set of detection methods based on direct analysis of video and audio, especially the artifacts that occur when deepfake technology is used.

(Source: FBI Private Industry Notification)

Based on this image, which shows the features of faces generated with deepfake technology, it is debatable whether the anatomical features of an interviewee can be compared, because calls frequently lack the Full HD quality needed to analyze such details. As for background blur, video callers often use it legitimately, so this indicator is ambiguous.

The same note also describes symptoms of the use of this technology, especially in certain behaviors: “In these interviews, the actions and lip movement of the person seen interviewed on-camera do not completely coordinate with the audio of the person speaking. At times, actions such as coughing, sneezing, or other auditory actions are not aligned with what is presented visually”. It should be noted, however, that a lack of synchronization between sound and video can also be caused by connection problems.
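To make the synchronization symptom more concrete, the following sketch estimates the offset between a visual signal and an audio signal by brute-force cross-correlation. It assumes two series sampled at the same rate: a per-frame mouth-opening measure (which would have to come from a face landmark tool, not shown) and an audio energy envelope. Both inputs and the function name are hypothetical, and a measured offset is not proof of a deepfake, since network problems produce the same effect.

```python
def best_lag(video_signal, audio_signal, max_lag):
    """Return the shift (in samples) at which audio best matches video.

    A positive result means the audio trails the video by that many samples.
    """
    n = min(len(video_signal), len(audio_signal))
    v_mean = sum(video_signal[:n]) / n
    a_mean = sum(audio_signal[:n]) / n
    # Mean-centre both signals so that constant offsets do not dominate.
    v = [x - v_mean for x in video_signal[:n]]
    a = [x - a_mean for x in audio_signal[:n]]

    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(n):
            j = i + lag
            if 0 <= j < n:
                score += v[i] * a[j]
        if score > best_score:
            best, best_score = lag, score
    return best


# Toy data: the audio repeats the mouth movement two samples later.
mouth_open = [0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 0, 0]
audio_energy = [0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0]
print(best_lag(mouth_open, audio_energy, max_lag=4))  # 2
```

In practice such a measurement would be one input among several, alongside the human checks described in this section.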

It should be borne in mind that video calls are carried out through applications such as Microsoft Teams, Google Hangouts or Zoom, so we depend on the performance of their servers and of our own connection. In global meetings, call quality is often inferior to that of ‘local’ calls. In addition, companies that offer video conferencing software should implement mechanisms (also based on artificial intelligence) to detect the use of deepfake technology. Microsoft developed such a solution in 2020 (see the Microsoft entry in References), although it does not apply to video calls.

In terms of further recommendations that may be helpful in detecting this attack scenario, keep in mind:

- Focus more on verifying the candidate’s profile in OSINT sources, as this is the weak point of the method. An attacker can obtain the image and PII of many people, but these are not necessarily linked in reality to the profile and work experience being presented. Checking such sources for credibility can largely counter fraud attempts.

- Where this technology is used in BEC-type scams, awareness training should emphasize additional verification of requests, especially financial ones, directly with the person who appears to be making the request.

- It is also worth including scenarios that use deepfake technology when determining the scope of penetration testing, particularly against finance and HR staff. Practical familiarity with these techniques will be more effective than theoretical and often ambiguous detection methods. Unfortunately, in the absence of detection built into video call software, the detection mechanism can only be human.

- As mentioned earlier, monitoring the default profiles shipped with open-source solutions can be a good detection method because of their repeatable appearance. Unfortunately, this can only be a supplementary rule, as it depends on how many such profiles have been published.
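The additional verification recommended for BEC-type requests can be written down as a simple rule. The sketch below is only an illustration of such a policy: the role list, the topic list, the channel names and the `Request` structure are all assumptions and would need to be adapted to a given organization.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical lists; every organization would define its own.
SENIOR_ROLES = {"ceo", "cfo", "director"}
SENSITIVE_TOPICS = {"payment", "wire transfer", "invoice", "bank details"}
LIVE_CHANNELS = {"voice", "video"}


@dataclass
class Request:
    channel: str                         # channel the request arrived on
    claimed_role: str                    # role the caller claims to hold
    topic: str                           # short label assigned by the recipient
    confirmed_via: Optional[str] = None  # second channel used to confirm, if any


def needs_out_of_band_check(req: Request) -> bool:
    """True when a risky request has not been confirmed over a different channel."""
    risky = (
        req.channel.lower() in LIVE_CHANNELS
        and req.claimed_role.lower() in SENIOR_ROLES
        and req.topic.lower() in SENSITIVE_TOPICS
    )
    # A confirmation only counts if it used a channel other than the original one.
    confirmed = (
        req.confirmed_via is not None
        and req.confirmed_via.lower() != req.channel.lower()
    )
    return risky and not confirmed


request = Request(channel="video", claimed_role="CEO", topic="wire transfer")
print(needs_out_of_band_check(request))  # True

request.confirmed_via = "phone callback"
print(needs_out_of_band_check(request))  # False
```

The key design choice is that a confirmation received over the same channel as the original request does not count, because that channel may be the one under the attacker's control.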

References

- https://www.bleepingcomputer.com/news/security/fbi-stolen-pii-and-deepfakes-used-to-apply-for-remote-tech-jobs/

- https://blogs.microsoft.com/on-the-issues/2020/09/01/disinformation-deepfakes-newsguard-video-authenticator/

- https://www.silentpush.com/blog/lapsus-group-an-emerging-dark-net-threat-actor

- https://www.wrike.com/remote-work-guide/trends-and-future-of-remote-work/

- https://www.ic3.gov/Media/News/2021/210310-2.pdf

- https://flashpoint.io/blog/understanding-insider-threats-recruitment-ttps/

- https://en.wikipedia.org/wiki/Deepfake

- https://github.com/iperov/DeepFaceLab

Glossary of terms

POC – Proof of Concept

TI – Threat Intelligence

PII – Personally Identifiable Information

VBV – Verified By Visa (2-Factor Authentication Method)

3DS – 3-D Secure (2-Factor Authentication protocol for card payments, used by Visa, Mastercard and other card schemes)