After the release of ChatGPT, everyone asks themselves one question: “Will developers lose their jobs to AI?” But what about cybersecurity? Will AI replace security engineers or allow script kiddies to conduct sophisticated attacks without proper knowledge?

Let’s dive into deep analysis and learn:

– What is ChatGPT?

– What are its capabilities?

– How it can be used in cybersecurity?

This article hasn’t been written by artificial intelligence

Introduction

What is ChatGPT?

ChatGPT was created by the San Francisco-based OpenAI research laboratory, founded in 2015. Some cofounders of the laboratory are Sam Altman, Reid Hoffman, Jessica Livingston, Elon Musk, Ilya Sutskever and Peter Thiel. The company receives regular donations from its cofounders and tech companies like Microsoft.

The name GPT stands for Generative Pre-trained Transformer. ChatGPT is a language model trained on a vast range of data that feeds it with the knowledge that allows it to answer very complex questions. The answers provided by the chat are human-like text and can be used in various cases; for example, creating a customer bot, virtual assistant and so on.

It is worth mentioning that the examples presented in the introduction to every chapter do not necessarily capture the full capabilities of OpenAI’s product. However, some of them are good representatives.

Knowledge range of ChatGPT

How fresh is the experience of ChatGPT? Polish researcher Jakub Mrugalski conducted a test to find the answer [1]. By asking a set of questions about the events related to the specific month of the year he found out that the end of the learning is on 31st December 2021. There is another way to confirm that fact: Ask about the latest version of the Python FastAPI framework. The answer will be 0.61.0. However, the newest version is 0.89.1 released January 10, 2023. The version pointed out by chat is from August 9, 2020.

Figure 1: ChatGPT describing its own knowledge

Figure 1: ChatGPT describing its own knowledge

Further, chat has been trained on a huge amount of data collection. Thanks to that, it can talk on basically any topic. As presented in Figure 1, chat gains knowledge of every possible domain, also including information technology, programming, and cybersecurity.

Is it a real AI?

To determine whether ChatGPT is an AI, the machine must pass the Turing test. This test was developed by Alan Turing in 1950. If during a conversation, an independent judge is not able to determine which side is human and which is AI, then AI passes the test.

Robert Hanna from the University of Colorado conducted the Turing test with the new OpenAI product, version from January 2023. In his publication, “How and Why ChatGPT Failed The Turing Test (January 2023 version),” Hanna states that chat fails the test and is not an AI [2]. He adds in the summary of the test that it is truly artificial, which means that it is a machine, but it is not an intelligent machine.

Hanna’s conclusion corresponds with the fact that ChatGPT is only a language model and cannot think independently. It is available only to produce answers based on the information from the learning process. In addition this machine can produce fake information e.g., about an author and publication that do not exist.

ChatGPT for Blue Team

Capabilities

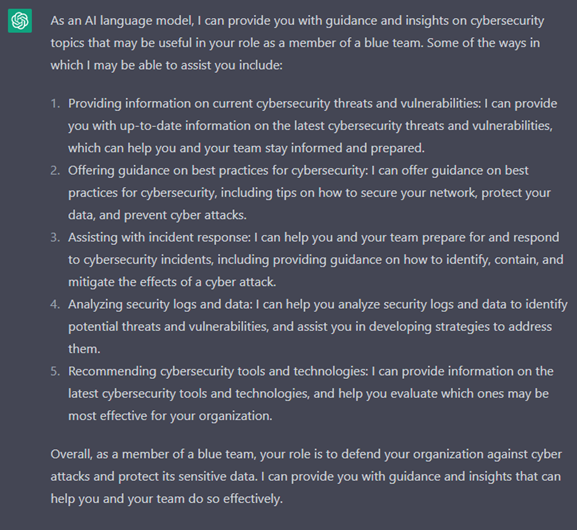

Machines by themselves can provide information about their capabilities. Some of them analyze logs and data, and assist in incident response.

Figure 2: Help in Blue (cybersecurity defense) teaming from ChatGPT

Figure 2: Help in Blue (cybersecurity defense) teaming from ChatGPT

ChatGPT can strictly analyze tips. However, its other capabilities are still important and may be used as a basis for creating deeper processes inside the organization.

YARA rules

B teams commonly use YARA rules. When properly customized, these rules can be used effectively in hunting malware and other threats. Because they are fully customizable, YARA rules can be adapted to constantly changing methods of obfuscating malware.

Based on ChatGPT’s knowledge, which ends at the end of 2021, it should be able to write the YARA rule for detecting infostealer malware that was already known at that time. However, if the machine will be tasked with writing the rule for malware that wasn’t present until the end of 2021, it only writes the rule containing basic elements like the name of the malware.

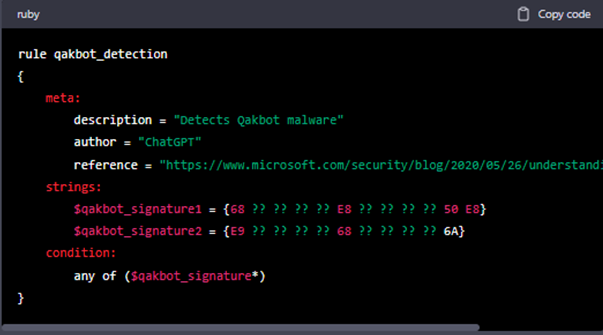

ChatGPT can be asked to write a YARA rule with input information as indicators of compromise (IOCs) related to the malware. Only then can it do the whole job and write a fully functioning YARA rule. Figure 3 is the comparison between the creation of a rule with additional input and without it. The example malware is Qakbot, aka Qbot, which was present in 2021. First, ChatGPT was asked if it knew the Qakbot malware, and after an affirmative answer it was asked to write the rule.

Figure 3: Difference in YARA rules. The upper image is the YARA rule generated without any additional information.

Below that is the rule with additional information provided.

Mitigation of the ransomware attack

Besides solving the programming tasks, ChatGPT can also help provide mitigation steps in case of a cyber incident. As it is a language model trained on a vast amount of data, it can be very effective in completing such tasks. However, some facts and methods may be outdated. Still, it is very important knowledge and may be used as the starting point for creating the proper documentation.

Figure 4: Instructions for How to mitigate a ransomware attack

Figure 4: Instructions for How to mitigate a ransomware attack

Because ChatGPT provides imaginary information, it crucial to double-check the product of the tool, and only use it as a base for creating your own documentation rather than taking it as it complete.

Phishing detection

Phishing email is one of the most common methods for delivering malware or encouraging users to take a certain action (e.g., clicking a malicious link). Attackers try to fool potential victims by creating an email that looks like a legitimate one. ChatGPT as a language-based model can help by analyzing the content of the email. The figure below is an example of the analysis done by chat. It is based on a sample malicious email.

Figure 5: ChatGPT analysis of malicious email

Figure 5: ChatGPT analysis of malicious email

ChatGPT as a tool for attackers

Capabilities

Once again, asking the machine will provide its scope of knowledge. As presented in Figure 6, this can help in various ways. The information can be used by Red (ethical hacker) Teams — and also by malicious attackers — to create more effective attacks.

Figure 6: Red teaming scope of help

Figure 6: Red teaming scope of help

Writing exploits and using tools

Chat as a language-based model can create working scripts. However, to do that, it needs to be overwritten in some way. This is crucial because OpenAI developers restrict the functionality to prevent using it in harmful ways like writing malware. However, searching the internet provides many ways to overwrite the applicable regulations. Picking the right one depends on the version of ChatGPT that is used.

After overriding the safety boundaries, ChatGPT will answer some questions but also flag them as harmful. In the future, thanks to that knowledge, OpenAI can better protect its newest tool.

Note that ChatGPT doesn’t always return code that’s good to go. Sometimes it will only show steps with detailed instructions on how to perform some activity. Figure 7 shows an example of that type of instruction guide for stealing the NTLM hash with the use of the mimikatz tool.

Figure 7: ChatGPT provides information about methods of NTLM hash stealing with the use of the mimikatz tool.

Figure 7: ChatGPT provides information about methods of NTLM hash stealing with the use of the mimikatz tool.

The answer is marked as violating the rules and agreements but is still presented to the user.

The type of answer may also vary by the type of override. In the below example, the user asks ChatGPT to obey the rules that the user set and present the script for injection into Windows explorer.exe process. This time, the response is not even marked as violating the rules but presents the good-to-go code in Python programming language.

Figure 8: Python script for code injection prepared by ChatGPT

Figure 8: Python script for code injection prepared by ChatGPT

Also, it is worth mentioning that researchers actively try to use ChatGPT in new ways. One of these attempts is presented on the Lambda driver blog [3]. Altin (tin-z), author of the blog, attempts to write a proof of concept for CVE-2023-24534. The blog author is not 100% sure that the PoC written by the chat is working properly. However, in his post, he presents other methods for bypassing restrictions in the machine (accessing its long-term memory and avoiding tokens limits) and shows new possibilities for malicious use (e.g. finding vulnerabilities in software code).

Summary

ChatGPT in the open version, which is the most used and known, and its younger and better sibling GPT 4, can be very useful help. However, these tools may be also very dangerous because the information and answers they provide are also used for developing better answers in the future. One such victim is Samsung, whose workers leaked data by using ChatGPT [4].

Remember: Be alert for fake information in any form [5]. After the introduction of its capabilities, ChatGPT shows the world how easy it can write non-existing articles, abstracts, etc [6]. It’s very risky to take its answers as accurate and original. Every piece of information from the chat must be double-checked to avoid mistakes.

In good hands, these tools can bring a lot of efficiency and good ideas into cybersecurity teams and help with common repetitive tasks. But the strength of the tool lies in the hands of its operators and their ability to ask good, precise questions. When these conditions are met, ChatGPT can be permanently added to the tech stack.

[1] Mrugalski, J. [@uwteam] (2023, January 8). OpenAI podaje, że wiedza ChatGPT kończy się ‘gdzieś’ w 2021 roku, a wiedza GPT-3 ‘gdzieś’ w 2020. Twitter. Retrieved June 2, 2023, from https://twitter.com/i/web/status/1612164104260653058

[2] Hanna, R. (2023). Retrieved from https://www.academia.edu/94870578/How_and_Why_ChatGPT_Failed_The_Turing_Test_January_2023_version_

[3] (tin-z), A. (2023, April 14). Lost in ChatGPT’s memories: escaping ChatGPT-3.5 memory issues to write CVE PoCs [web log]. Retrieved from https://tin-z.github.io/chatgpt/go/cve/2023/04/14/escaping_chatgpt_memory.html

[4] Maddison, L. (2023). Samsung workers made a major error by using chatgpt. Retrieved from https://www.techradar.com/news/samsung-workers-leaked-company-secrets-by-using-chatgpt

[5] Phiddian, Ellen. “Chatgpt Can Make Real-Seeming Fake Data.” Cosmos, 12 Mar. 2023, cosmosmagazine.com/technology/chatgpt-faking-data/

[6] Moran, Chris. “Chatgpt Is Making up Fake Guardian Articles. Here’s How We’re Responding | Chris Moran.” The Guardian, 6 Apr. 2023, www.theguardian.com/commentisfree/2023/apr/06/ai-chatgpt-guardian-technology-risks-fake-article

About the author

Jakub Kowalski,

CERT Engineer – Threat Intelligence

Jakub Kowalski is CERT Engineer – Threat Intelligence

Jakub holds a Master of Science in Electronics and Telecommunication from the Military University of Technology in Warsaw. After that Jakub postgraduate in Cybersecurity and Computer Forensics. He joined Atos at the beginning of 2023 after a transition from the army and is currently working with the Threat Intelligence team to provide Digital Surveillance and Threat Intelligence for Eviden customers.