Unleash the performance of your HPC applications

For many years, steady processor improvements delivered regular, effortless performance gains. Today, the growing number of compute cores, in CPUs as well as in accelerators and coprocessors, calls for real optimization work to reach maximum performance.

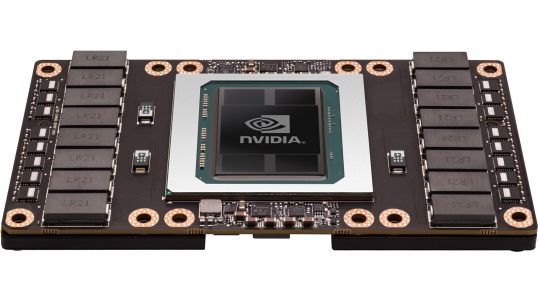

The Atos Center of Excellence (CoE), in partnership with Intel and NVIDIA, helps you achieve optimal performance and maximum energy efficiency for your applications on manycore technologies. CoE experts can advise you and help you analyze, optimize and port your codes.

This includes, for example:

- proofs of concept (POCs) to demonstrate performance gains,

- workshops where you can talk with experts and get started on porting, optimizing and accelerating your simulations,

- application and solution benchmarks,

- tailored training,

- access to specific computing resources,

- the Fast Start program, so that your applications get the most out of your Atos supercomputers from day one (porting, optimization and configuration of your applications well before the system is delivered).

HPC is everywhere

HPC has become an essential part of our daily lives, even if we are not always aware of it. It is now used in every sector: for our medicines, our mobile phones, the film industry, our athletes' equipment, car manufacturing and fuel optimization. HPC has a direct impact on our quality of life and makes our lives safer, with more reliable and more accurate weather forecasts and better anticipation of natural disasters. Every sector of industry and research relies on high-performance computing. Discover here a few examples of what BullSequana supercomputers can bring to their users, and perhaps how your research or your business can benefit from HPC.

Deep Learning

Deep learning is the ability of a computer to recognize specific representations (images, text, video, sound) after being shown many examples of them. For example, after being presented with thousands of photos of cats, the program "discovers" by itself which features are specific to a cat, and can then tell a cat from a dog or from any other photo.

This learning technology, based on artificial neural networks, has revolutionized artificial intelligence (AI) over the past five years. So much so that hundreds of millions of people now rely on services powered by Deep Learning for speech or face recognition, real-time voice translation or video discovery. It is used, for example, by Siri, Cortana and Google Now.

The Center of Excellence

The Center of Excellence aims to help customers who are starting their cognitive projects by providing recommendations on innovative methods and algorithms for their use cases, guidance on what application performance to expect, and foresight on hardware technology trends.

The Center of Excellence staff is made up of high-performance computing engineers and data scientists, including deep learning and NLP experts.

Customers can use the Center of Excellence's existing infrastructures, based on proven reference architectures, to test their applications while getting advice on how to make the most of them. The Center of Excellence supports customers during the proof-of-concept phases through training, webinars, workshops and dedicated services.

Atos and Deep Learning

The Atos team works actively with its technology partner NVIDIA® to provide the Deep Learning expertise, the hardware and software solutions, and the services you need throughout the development and production phases of your Deep Learning project.

Operations service

To keep operations efficient and operating costs under control, Atos experts make sure you stay within your SLAs.

Atos teams can manage your operations up to the skills transfer and handover to your own team. Our operations service consists of remote monitoring coupled with event management, incident and user request management, change management with impact analysis, and a continuous optimization service that covers problem management, knowledge management and documentation.

Data center and energy efficiency

There are many ways to optimize energy consumption, from energy-efficient technology such as Direct Liquid Cooling, to optimized cooling systems, scheduler configuration, and the definition of energy key performance indicators to make your applications energy-aware.

Atos's range of expertise in data center efficiency covers customer site analysis, logistics requirements, standards compliance, data center integration, and maintenance and support.

For example, to optimize the design of a data center, Atos can simulate an entire computer room, including airflows for optimal cooling, and model cable routes and rack locations.

Training

As part of the standard installation process, Atos provides customized, on-site workshop training for the system administration team. On request, Atos can also offer a range of advanced training programs for HPC users and HPC developers, which will help your teams get the most out of their Atos supercomputer and run operations optimally.

The training courses on offer cover application development and optimization, how to port applications and which compiler options to select, and how to improve performance, for example how to improve job submissions or how to choose message queues. Some courses are dedicated to operations and system administration, and to the management of operating systems and tools (batch manager, file system management, network management, monitoring, and so on). A specific data management course focuses on data assessment.

–

Supercomputers and the weather

Without supercomputers, weather forecasting as we know it today would not be possible. And as the computing power available to weather agencies increases, weather forecasts improve in many respects.

With supercomputers, climate scientists can run climate simulations at higher resolution, include additional processes in Earth system models, or reduce the uncertainties in climate projections.

Relying on dependable weather forecasts to anticipate severe events

Between 1992, when Météo-France invested in its first supercomputer, and today, computing capacity has been multiplied by 500,000, and Météo-France hopes to maintain the same trend in the future. Weather forecasting agencies around the world need to:

- issue forecasts every hour

- use a finer mesh size for more accurate and more reliable predictions

- predict severe weather events, including their exact location and time

These goals require higher model resolution and the incorporation of more data and observations into the forecasting process. That means more computing resources and the ability to manage massive data efficiently.
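
As a rough illustration of why a finer mesh demands more computing resources, the sketch below applies a common rule of thumb. The rule is an assumption made here, not a figure from any forecasting agency: refining the horizontal grid spacing by a factor r multiplies the number of horizontal grid points by about r², and the time step usually has to shrink by about r to keep the numerical scheme stable, so the cost grows roughly as r³. The function name `relative_cost` and the `vertical_factor` parameter are hypothetical.

```python
def relative_cost(old_spacing_km: float,
                  new_spacing_km: float,
                  vertical_factor: float = 1.0) -> float:
    """Rough cost multiplier when refining a horizontal grid.

    Assumption (rule of thumb, not a measured value): cost scales with
    the number of horizontal grid points (r**2) times the number of
    time steps (r), where r is the refinement factor.
    """
    if old_spacing_km <= 0 or new_spacing_km <= 0:
        raise ValueError("grid spacings must be positive")
    r = old_spacing_km / new_spacing_km   # refinement factor
    horizontal_points = r ** 2            # two horizontal dimensions
    time_steps = r                        # shorter time step for stability
    return horizontal_points * time_steps * vertical_factor


if __name__ == "__main__":
    # Hypothetical spacings, chosen only to show the trend.
    for old, new in [(10.0, 5.0), (10.0, 1.0)]:
        factor = relative_cost(old, new)
        print(f"{old} km -> {new} km: about {factor:.0f}x the compute cost")
```

Under this assumption, halving the mesh size costs about 8 times more compute, and going from 10 km to 1 km costs about 1,000 times more, before any additional data or observations are taken into account.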

Simulating the climate on a global scale

Atos is a partner of the Centre of Excellence in Simulation of Weather and Climate in Europe (ESiWACE), a Horizon 2020 project on the HPC ecosystem in Europe that builds on the European Network for Earth System Modelling and the European Centre for Medium-Range Weather Forecasts (ECMWF). The main goal of ESiWACE is to substantially improve the efficiency and productivity of numerical weather and climate simulation on high-performance computing platforms by supporting the end-to-end workflow of global Earth system modelling in an HPC environment. In addition, with the coming exascale era in mind, ESiWACE will establish demonstrator simulations, to be run at the highest affordable resolutions (target: 1 km). This will provide insight into the computability of configurations that will be sufficient to address the key scientific challenges in weather and climate prediction.

HPC to meet the challenge of climate change

Valérie Masson-Delmotte is a French climate scientist and research director at the Commissariat à l’énergie atomique et aux énergies alternatives, where she works at the Laboratoire des sciences du climat et de l’environnement (LSCE). Here she explains how high-performance simulation can help address the challenges of climate change.

CNAG: Unlocking the mysteries of DNA

Ivo G. Gut, director of the Spanish genomics center (CNAG), explains how his team analyzes more than ten human genomes every day using Atos supercomputers. Their ultimate goal is to turn sequences into valuable information in order to improve people's health and quality of life.

–

The Pirbright Institute: advancing viral research and animal health

When a deadly virus emerges, scientists must react quickly to characterize the virus, track its spread and prevent it from devastating livestock and possibly infecting humans. As a world leader in this field, the Pirbright Institute in the United Kingdom needs flexible high-performance computing (HPC) resources that can handle a wide variety of workloads.

Pirbright has deployed an Atos supercomputer. With a unified environment running its diverse applications, Pirbright is improving scientific productivity and helping policy makers respond effectively when a viral outbreak threatens.

–

Boost the transfer of omics into the clinical environment

To cope with the demands of an aging population, today's healthcare system must evolve toward a sustainable model focused on patient well-being. This revolution will only be possible by transferring advances in research, genomic research in particular, into everyday healthcare.

–

GENyO extends its analytical capabilities for precision medicine

The Centre for Genomics and Oncological Research (GENyO) needed a more robust infrastructure to meet the growing demand for bioinformatics analyses and large-scale projects. GENyO selected a Bull supercomputer from Atos. Today, the center is increasing its scientific productivity on large-scale projects that will contribute to new precision diagnostic capabilities, pharmaceutical breakthroughs and more effective public health services for the Andalusia region of Spain.

–

CIPF: leveraging genomics for better diagnostics and treatments

Genome sequencing and analysis are complex tasks that require powerful analysis platforms. The computing time needed for sequencing has dropped sharply in recent years, making it possible to greatly increase the amount of genomic data collected on large study populations. This paves the way for a new genomics-based health service that draws on in-depth, comprehensive genomic analyses to deliver predictive and personalized medicine. The challenge is to achieve:

- better, predictive diagnosis

- more effective treatments

- personalized dosage

To carry out such a promising project, sequence analysis must be available on an industrial scale and complex analyses must be supported. This requires computing power on an unprecedented scale.